Toy Projects

Integrated System

| F1tenth Autonomous Racing Car 2024 This project is built on F1tenth, an open-source platform for small-scale autonomous driving, and was prepared for the 15th F1tenth Autonomous Grand Prix at IEEE ICRA 2024. |

| Quadrotor Planning and Control 2024 Implemented state estimation, planning, trajectory optimization, and control for a quadrotor from scratch. [Code] |

| Pick and Place Challenge 2023 First place. The project aims to pick up static and dynamic blocks and stack them. [Recording] |

Reinforcement Learning

| PPO in Continuous Control 2024 PPO and related actor-critic (AC) algorithms for continuous control tasks. [Code] Tested common implementation tricks to improve performance. The results show that implementation details matter and that only some of the tricks help in the walker environment. Observed effects: advantage, state, and reward normalization (positive); reward scaling (negative); policy entropy (negative); learning rate decay (positive); gradient clipping (positive); orthogonal initialization (neutral); Adam optimizer epsilon parameter (neutral); tanh activation function (positive). |
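
Three of the tricks listed above (advantage normalization, state normalization, and learning rate decay) can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions, not the code from the linked repository: the names `normalize_advantages`, `RunningNorm`, and `linear_lr` are hypothetical, and the linear decay schedule is one common choice rather than necessarily the one used in the project.

```python
import numpy as np


def normalize_advantages(adv, eps=1e-8):
    """Rescale a batch of advantages to zero mean and (roughly) unit std."""
    adv = np.asarray(adv, dtype=np.float64)
    return (adv - adv.mean()) / (adv.std() + eps)


class RunningNorm:
    """Running mean/variance (Welford update) used to normalize states."""

    def __init__(self, shape):
        self.n = 0
        self.mean = np.zeros(shape, dtype=np.float64)
        self.m2 = np.zeros(shape, dtype=np.float64)  # sum of squared deviations

    def update(self, x):
        x = np.asarray(x, dtype=np.float64)
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def __call__(self, x, eps=1e-8):
        # Sample variance; falls back to dividing by 1 until two samples exist.
        var = self.m2 / max(self.n - 1, 1)
        return (np.asarray(x, dtype=np.float64) - self.mean) / (np.sqrt(var) + eps)


def linear_lr(lr0, step, total_steps):
    """Assumed schedule: decay the learning rate linearly from lr0 to zero."""
    return lr0 * (1.0 - step / total_steps)
```

In a typical training loop, `RunningNorm.update` would be called on every observed state, the normalized state fed to the policy, and `normalize_advantages` applied once per batch before computing the PPO loss.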

Perception

| Mono ORB Visual Odometry 2023 A minimal Python implementation of monocular visual odometry. |

| Stereo Vision 2023 Reconstructed objects from stereo camera data. Visualization: used the K3D and Plotly libraries for real-time visualization of the 3D reconstructions, so results can be inspected immediately. |

| NeRF 2023 An implementation of NeRF (Neural Radiance Fields) in PyTorch for rendering novel views of objects. |

| Augmented Reality 2023 Rendered virtual objects (a drill and a bottle) into a video by first estimating the camera pose from an AprilTag, using either a P3P (3-point correspondence) or PnP (n-point correspondence) solver implemented from scratch (no OpenCV). |

SLAM

| Humanoid Robot SLAM 2023 Implemented particle filter SLAM in an indoor environment using data from an IMU and a LiDAR sensor. The data was collected from a humanoid robot named THOR, built at Penn and UCLA. [Video about the robot] |
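
A particle filter needs a resampling step to concentrate particles on likely poses. The sketch below shows one common option, systematic (low-variance) resampling, together with the effective sample size often used to decide when to resample. It is an illustration only: the function names are hypothetical, and the resampling scheme actually used in the project is not stated here.

```python
import numpy as np


def effective_sample_size(weights):
    """1 / sum(w^2) for normalized weights; equals N when weights are uniform."""
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()
    return 1.0 / np.sum(w ** 2)


def systematic_resample(weights, rng=None):
    """Return indices of the particles kept by systematic resampling."""
    rng = np.random.default_rng() if rng is None else rng
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()
    n = len(w)
    # One random offset, then n evenly spaced sampling positions in [0, 1).
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(w)
    # First index whose cumulative weight exceeds each position.
    idx = np.searchsorted(cumulative, positions, side="right")
    # Guard against floating-point round-off at the upper end.
    return np.minimum(idx, n - 1)
```

A typical usage pattern is to resample only when `effective_sample_size(weights)` drops below some fraction of the particle count, then reset all weights to uniform.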

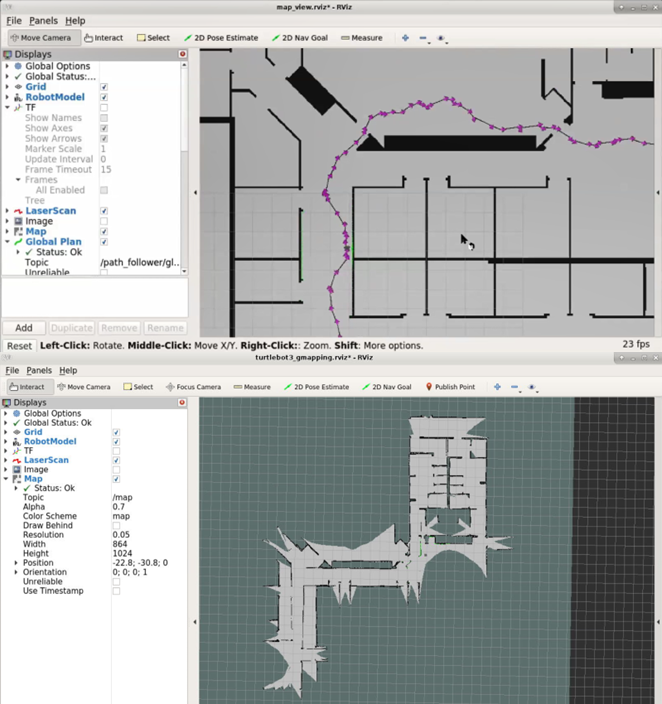

| Differential Wheeled Robot Lidar SLAM in Indoor Environment 2022 • Deployed PRM and RRT* to generate global paths indoors in the Gazebo simulation environment. • Adapted an MPC controller with a dynamic state lattice to follow the trajectory, visualized in ROS RViz. • Implemented EKF localization and compared it against a particle filter. |

Planning

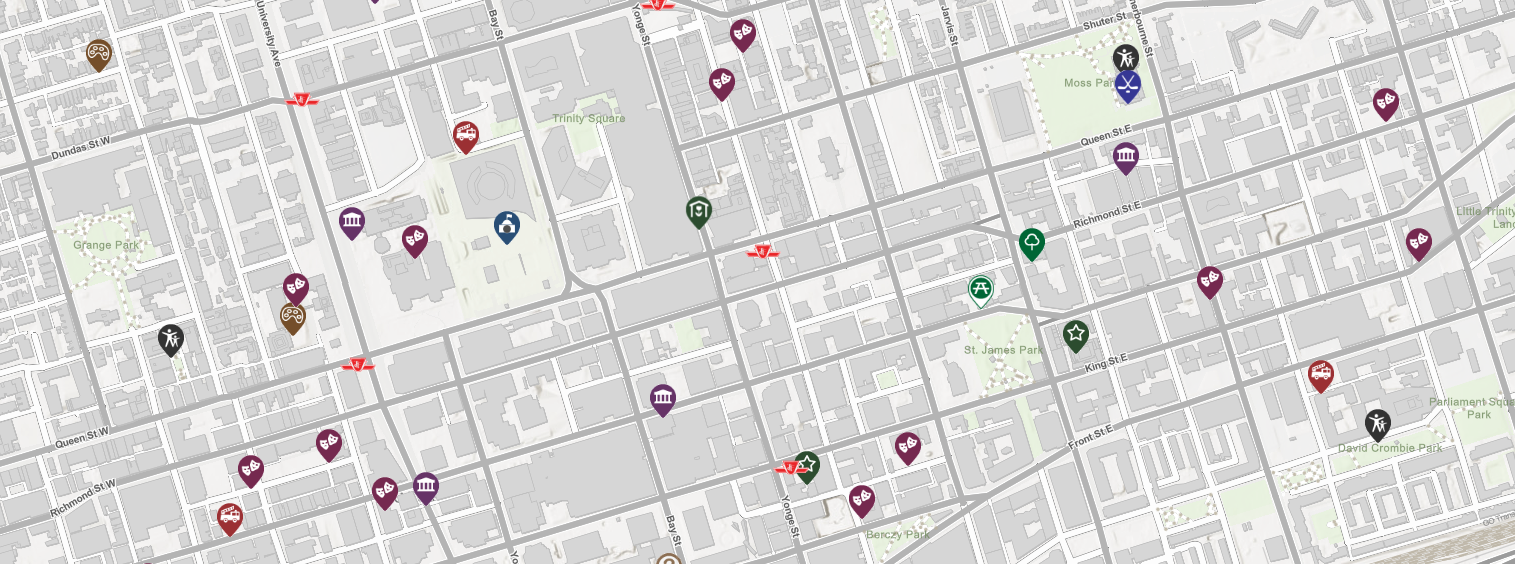

| 2D Path Planning Interface on OpenStreetMap 2022 • Implemented DFS, BFS, and greedy best-first search and compared them with the A* planning algorithm on a building-dense 2D map. • Improved weighted A* by setting the cost function based on real-time traffic and road conditions. • Developed a Java GUI that visualizes the algorithms and responds to mouse clicks. |
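
Weighted A* inflates the heuristic by a factor `weight`, trading path optimality for fewer expansions. The sketch below illustrates the idea on a small 4-connected grid with unit step costs. These are simplifying assumptions: the project itself was written in Java on OpenStreetMap data with traffic-dependent costs, and the function name and grid encoding here are hypothetical.

```python
import heapq


def weighted_a_star(grid, start, goal, weight=1.0):
    """Search a grid (0 = free, 1 = blocked); return a list of cells or None.

    weight = 1 gives plain A*, weight > 1 is greedier, weight = 0 is
    uniform-cost search.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance, admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(weight * h(start), 0, start)]
    g_cost = {start: 0}
    parent = {start: None}
    closed = set()

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        closed.add(cell)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1  # unit step cost (assumption)
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (new_g + weight * h(nxt), new_g, nxt))
    return None
```

Replacing the unit step cost with an edge cost derived from road type or congestion would be one way to approximate a traffic-aware cost function.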

Control and Hardware

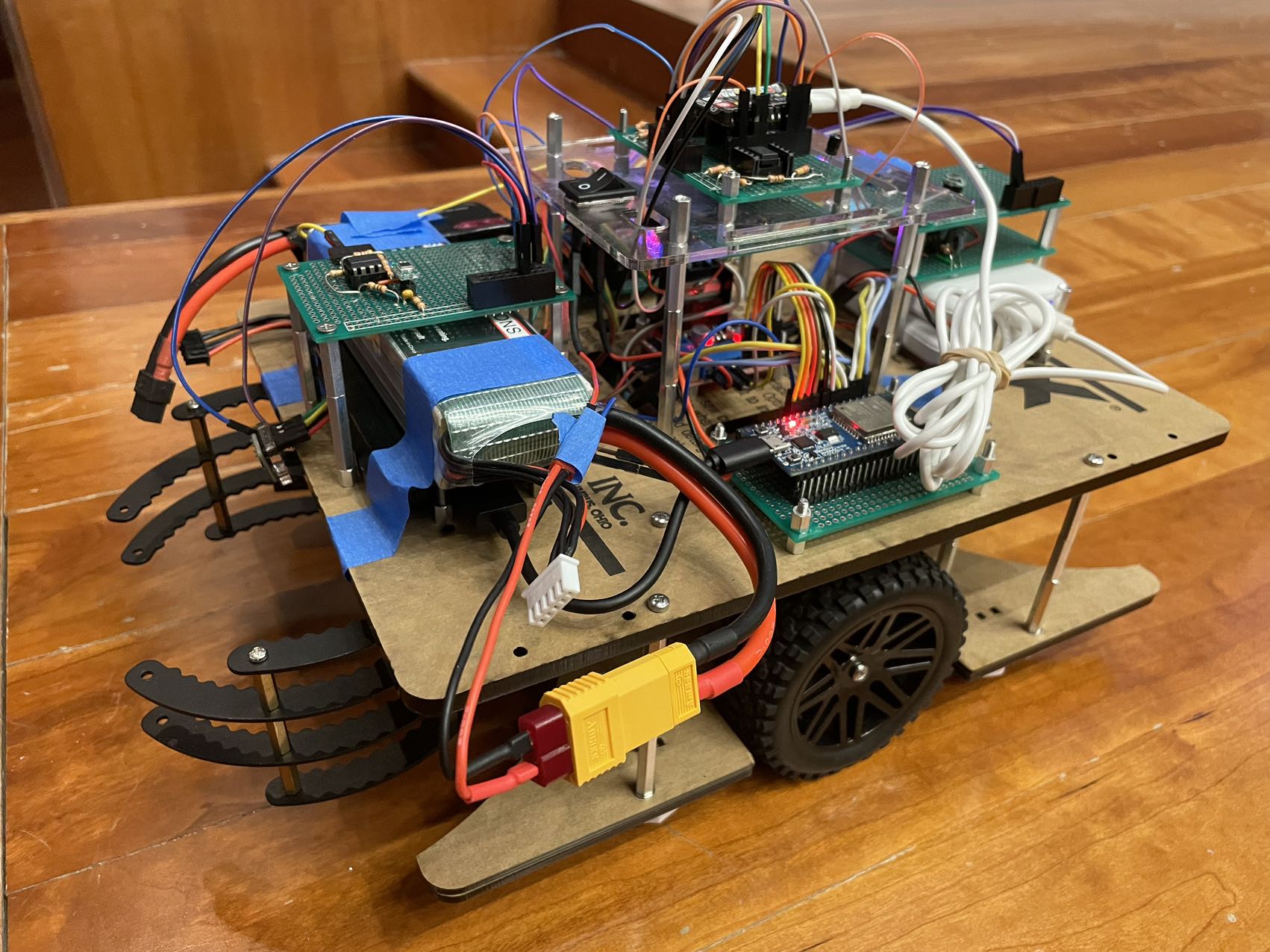

| National Engineering Practice 2021 National Silver Prize among more than two hundred universities. After implementing an array of sophisticated control strategies for tracking and precise positioning, we discovered that a finely tuned, hard-coded speed control, especially after refining the curve trajectories, offered the most reliable performance. This experience underscored a valuable lesson: complexity isn't inherently superior; adaptability and tailoring solutions to meet specific demands are crucial. [Video] [Website] |

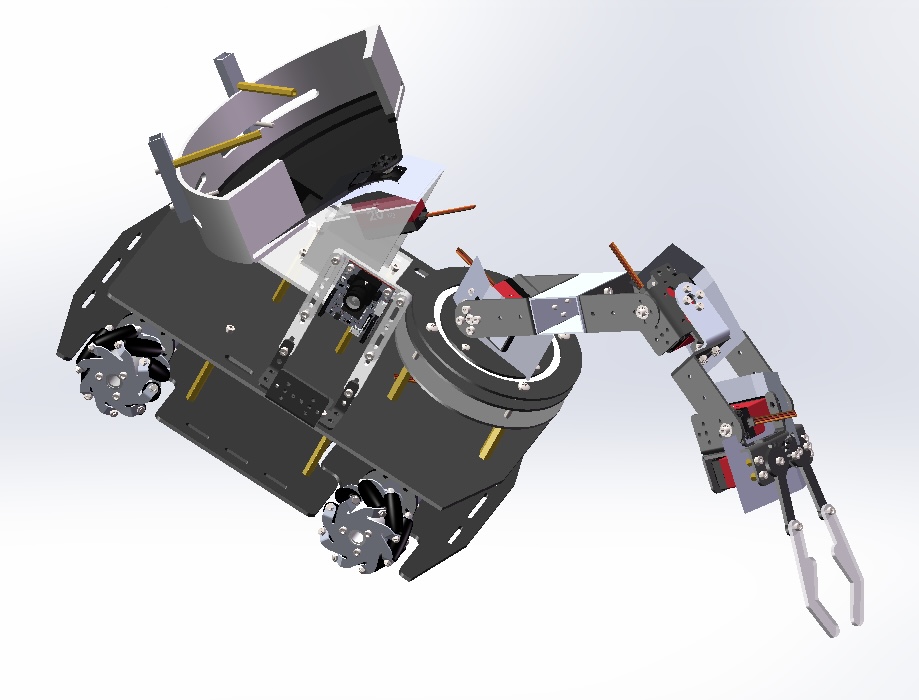

| Grant Theft Autonomous 2023 First place. A fun mechatronics competition in which the operator controls the robot without seeing the field. [GRASP Lab Youtube Channel] |

| 6-DoF Stewart Platform 2024 Following the first-place result in Grant Theft Autonomous 2023, I was invited to build a 6-DoF Stewart platform for the UPenn Design School. |